This e-portfolio serves as a comprehensive record of my work and the learning outcomes achieved throughout the Machine Learning module in August 2023 at the University of Essex. To facilitate navigation and provide an overview, the following table presents a list of all the requisite activities:

| Component | Chapter |

|---|---|

| Collaborative Discussion 1: The 4th Industrial Revolution | Collaborative Discussion 1: The 4th Industrial Revolution |

| e-Portfolio Activity: Correlation and Regression | Correlation and Regression |

| Wiki Activity: Clustering | Wiki Activity: Clustering |

| e-Portfolio Activity: Jaccard Coefficient Calculations | Jaccard Coefficient Calculations |

| e-Portfolio Activity: Perceptron Activities | Perceptron Activities |

| e-Portfolio Activity: Gradient Cost Function | Gradient Cost Function |

| Collaborative Discussion 2: Legal and Ethical views on ANN applications | Collaborative Discussion 2: Legal and Ethical views on ANN applications |

| e-Portfolio Activity - CNN Model Activity | CNN Model Activity |

| e-Portfolio Activity: Model Performance Measurement | Model Performance Measurement |

| Individual contributions - Development Team Project: Project Report | Development Team Project: Project Report |

| Individual contributions - Development Team Project: Team Presentation | Development Team Project: Team Presentation |

| Reflective piece | Reflective Peace |

Collaborative Discussion 1: The 4th Industrial Revolution

This chapter encompasses the introductory post, peer responses written by me, and my summary post for Collaborative Discussion 1 on the topic of the 4th Industrial Revolution.

Initial Post

Amid escalating environmental concerns, Industry 4.0 is potent in combatting climate change. This transformative shift in industrial processes, characterised by integrating digital technologies, data-driven decisions, and advanced automation, holds significant promise in curbing carbon emissions and fostering sustainability across sectors (Ben Youssef, 2020). Hermann et al. (2016) state that Industry 4.0 rests on principles like interoperability, virtualization, decentralization, real-time capability, service orientation, and modularity.

An essential merit of Industry 4.0 lies in its prowess to amplify resource efficiency. Industries can oversee energy consumption, optimise production, and curtail waste generation through sensor deployment and IoT devices (Bai et al., 2020). Interconnected systems guarantee seamless value chain operations. Analyzing real-time data allows precise operational tuning, culminating in diminished energy use and fewer greenhouse gas emissions. Industry 4.0-enabled predictive maintenance extends equipment lifecycles, minimizing replacements and their carbon footprint.

Furthermore, Industry 4.0 propels smart grid and energy management system advancement. Renewable energy integration, e.g., solar panels and wind turbines, benefits from sophisticated monitoring and control mechanisms. These technologies optimise energy distribution, reducing fossil fuel reliance and traditional energy source emissions.

Additionally, Industry 4.0 champions circular economy models through closed-loop production systems. Heightened traceability ensures products are monitored throughout their lifespan, facilitating efficient recycling and repurposing. Standardised, interchangeable components streamline customization, scalability, and maintenance.

In sum, Industry 4.0 heralds myriad avenues for mitigating climate change repercussions. By refining resource usage, bolstering energy efficiency, and endorsing circular economy principles, this technological shift equips industries to contribute significantly to global sustainability aims. However, realizing these gains necessitates robust collaboration among policymakers, industries, and researchers. The formulation of comprehensive frameworks and regulations, as advocated by Fritzsche et al. (2018), becomes pivotal for the responsible integration of Industry 4.0 in climate-centric endeavours.

References

Bai, C., Dallasega, P., Orzes, G. & Sarkis, J. (2020) Industry 4.0 technologies assessment: A sustainability perspective. International journal of production economics, 229, p.107776.

Ben Youssef, A. (2020) How can Industry 4.0 contribute to combatting climate change?. Revue d'économie industrielle, (169), pp.161-193.

Fritzsche, K., Niehoff, S., & Beier, G. (2018). Industry 4.0 and Climate Change—Exploring the Science-Policy Gap. Sustainability, 10(12): 4511. doi:10.3390/su10124511.

Hermann, M., Pentek, T. and Otto, B. (2016) Design principles for industrie 4.0 scenarios. In 2016 49th Hawaii international conference on system sciences (HICSS) (pp. 3928-3937). IEEE.

Peer Response 1

Thank you Giuseppe, for your comprehensive overview of the impact of Industry 4.0 on trading, highlighting the integration of advanced technologies such as AI, IoT, Blockchain, and Big Data analytics. You discuss the positive transformations these technologies have brought about in trading practices, including algorithmic and high-frequency trading, enhanced decision-making, secure transactions, and personalised customer experiences. Moreover, you outline the challenges associated with these advancements, including concerns about market fairness, privacy violations, legal complexities, regulatory inconsistencies, job displacement, and ethical dilemmas.

The ethical dimensions of Big Data analytics have gained prominence in recent years, particularly concerning their implications for individuals. Someh (2019) identifies privacy as a primary concern in this context, emphasizing the importance of individuals controlling how organizations access, modify, and utilise their data. Furthermore, trust is a pivotal element in ethical data usage, with issues such as unauthorised monitoring and unsolicited intrusions being highlighted as potential trust eroders. Ethical dilemmas arise when individuals lack awareness of the purposes and processes involved in Big Data analytics. Lastly, the impact of Big Data analytics on individual choices is a complex issue, as it may both restrict choices and raise concerns about autonomy. As outlined by Someh (2019), these ethical considerations underscore the evolving landscape of ethical challenges in the era of Big Data analytics, challenging traditional ethical paradigms, as pointed out by Zwitter (2014).

References

Someh, I., Davern, M., Breidbach, C.F. and Shanks, G. (2019) Ethical issues in big data analytics: A stakeholder perspective. Communications of the Association for Information Systems, 44(1), p.34.

Zwitter, A. (2014). Big Data ethics. Big Data & Society, 1(2).

Peer Response 2

Thank you for your insightful post on the impact of Industry 4.0 on agriculture. Your use of current literature provides a strong foundation for the discussion and showcases your commitment to staying up-to-date with the latest research in the field.

Your post highlights the convergence of Industry 4.0 and agriculture, particularly Agriculture 4.0. This transformation promises to revolutionise conventional farming practices and address various issues, including farm productivity, environmental sustainability, food security, and crop losses (Rose et al., 2021). However, it is worth considering whether there are concrete estimates of the savings or efficiency gains that can be achieved with Industry 4.0 in agriculture. Quantifying the potential economic and environmental benefits of these technologies could strengthen your argument and provide a more precise picture of the impact of Agriculture 4.0.

Additionally, you touched on the environmental challenges in agriculture. At least in Switzerland, the sector needs help to meet federal government targets related to biodiversity, nutrient surpluses, emissions, and water quality (Baur & Flückiger, 2018). Are there strategies to ensure that local savings are effectively utilised and do not lead to a simple distribution over a larger area, potentially exacerbating existing environmental issues?

In summary, your post is well-researched and provides an excellent overview of the impact of Industry 4.0 on agriculture. However, incorporating concrete savings estimates and addressing strategies to balance efficiency gains with environmental sustainability would enhance the depth of your analysis.

References

Baur, P. and Flückiger, S. (2018) Nahrungsmittel aus ökologischer und tiergerechter Produktion–Potential des Standortes Schweiz.

Rose, D.C., Wheeler, R., Winter, M., Lobley, M. and Chivers, C.A. (2021) Agriculture 4.0: Making it work for people, production, and the planet. Land use policy, 100, p.104933.

Summary Post

The advent of Industry 4.0, characterised by the integration of digital technologies, data-driven decisions, and advanced automation, represents a pivotal advancement in addressing climate change (Bai et al., 2020). This transformative paradigm holds the potential to make substantial contributions to climate change mitigation across several key areas.

First and foremost, Industry 4.0 promises resource efficiency (Ben Youssef, 2020). By deploying sensors and Internet of Things (IoT) devices, industries can meticulously monitor energy consumption, optimise production processes, and significantly reduce waste. The real-time data analysis enables precise operational adjustments, reducing energy consumption and decreasing greenhouse gas emissions (Hermann et al., 2016). Furthermore, predictive maintenance strategies extend the lifespan of equipment, effectively reducing the need for replacements and the carbon footprint associated with them (Ben Youssef, 2020).

Industry 4.0 also plays a crucial role in advancing energy management systems (Bai et al., 2020). It facilitates the integration of renewable energy sources, such as solar panels and wind turbines, into the energy grid. This optimisation of energy distribution ultimately reduces dependence on fossil fuels and a corresponding decrease in traditional energy source emissions.

Moreover, Industry 4.0 actively promotes circular economy models by enabling closed-loop production systems. Enhanced traceability ensures that products are monitored throughout their entire lifecycle, making efficient recycling and repurposing possible. Standardised and interchangeable components streamline customisation, scalability, and maintenance.

However, the journey towards realising the full potential of Industry 4.0 in climate change mitigation has its challenges. Cybersecurity, data privacy, and the socio-economic implications of automation must be addressed (Frey & Osbourne, 2017; Schwab & Zahidi, 2020; Culot et al., 2019; Morrar et al., 2017). Moreover, the critical need for effective change management and public acceptance of these transformative technologies should not be underestimated.

Industry 4.0 could revolutionise how we address climate change. Its multifaceted benefits, ranging from resource efficiency and energy management to circular economy promotion, can be harnessed to create a more sustainable and resilient future. However, to fully unlock these benefits, addressing the challenges and collaborating on the regulatory, educational, and change management fronts is essential (Fritzsche et al., 2018).

References

Bai, C., Dallasega, P., Orzes, G. & Sarkis, J. (2020) Industry 4.0 technologies assessment: A sustainability perspective. International journal of production economics, 229, p.107776.

Ben Youssef, A. (2020) How can Industry 4.0 contribute to combatting climate change?. Revue d'économie industrielle, (169), pp.161-193.

Culot, G., Fattori, F., Podrecca, M., & Sartor, M. (2019). Addressing industry 4.0 cybersecurity challenges. IEEE Engineering Management Review, 47(3), 79-86.

Fritzsche, K., Niehoff, S., & Beier, G. (2018). Industry 4.0 and Climate Change—Exploring the Science-Policy Gap. Sustainability, 10(12): 4511. doi:10.3390/su10124511.

Frey, C. B., & Osborne, M. A. (2017). The Future of Employment: How Susceptible are Jobs to Computerisation? Technological Forecasting and Social Change 114: 254–280. DOI: https://doi.org/10.1016/j.techfore.2016.08.019

Hermann, M., Pentek, T. and Otto, B. (2016) Design principles for industrie 4.0 scenarios. In 2016 49th Hawaii international conference on system sciences (HICSS) (pp. 3928-3937). IEEE.

Morrar, R., Arman, H., & Mousa, S. (2017). The fourth industrial revolution (Industry 4.0): A social innovation perspective. Technology innovation management review, 7(11), 12-20.

Schwab, K. & Zahidi, S. (2020) The Future of Jobs Report. World Economic Forum. Available from: https://www3.weforum.org/docs/WEF_Future_of_Jobs_2020.pdf [Accessed 21 October 2023]

Correlation and Regression

This chapter includes my contributions to the e-Portfolio Activity focused on Correlation and Regression.

Pearson Correlation

The Pearson correlation (Freedman et al., 2007) is defined as

where is the correlation coefficient, is the values of the x-variable in the sample, is the mean of the x-variable. Likewise is and the variable of the sample respectively its mean values.

The Pearson correlation coefficient is dimensionless, meaning it remains consistent regardless of the scale of the variables involved. Its values fall within the range of -1 to +1. The interpretation of the Pearson correlation coefficient was introduced by Cohen (1992), who established thresholds for effect sizes. He categorised an r-value of 0.1 as indicating a small effect size, 0.3 as medium, and 0.5 as large. Cohen emphasised that a medium effect size is typically discernible to the naked eye of a keen observer.

import numpy as np

from matplotlib import pyplot as plt

import seaborn as sns

from scipy.stats import pearsonr

def print_pearson_cor(data1, data2):

# calculate covariance matrix

covariance = np.cov(data1, data2)

# calculate Pearson's correlation

corr, _ = pearsonr(data1, data2)

# plot

plt.scatter(data1, data2)

plt.show()

# summarize

print('data1: mean=%.3f stdv=%.3f' % (np.mean(data1), np.std(data1)))

print('data2: mean=%.3f stdv=%.3f' % (np.mean(data2), np.std(data2)))

print('Covariance: %.3f' % covariance[0][1])

print('Pearsons correlation: %.3f' % corr)# Positive Correlation

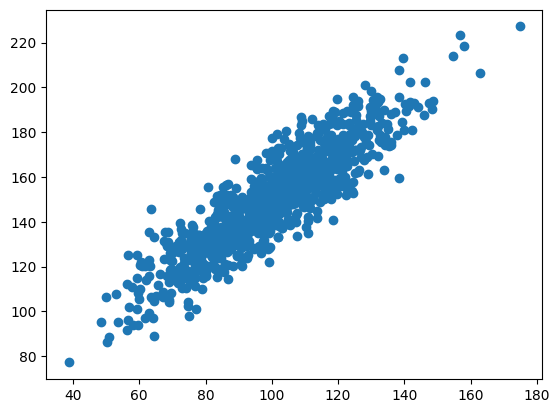

data1 = 20 * np.random.randn(1000) + 100

data2 = data1 + (10 * np.random.randn(1000) + 50)

print_pearson_cor(data1, data2)

# Negative Correlation

data1 = 20 * np.random.randn(1000) + 100

data2 = -data1 + (10 * np.random.randn(1000) + 50)

print_pearson_cor(data1, data2)

# No Correlation (Random Data)

data1 = 20 * np.random.randn(1000) + 100

data2 = np.random.randn(1000)

print_pearson_cor(data1, data2)# Create a nonlinear relationship between two variables

data1 = np.linspace(0, 10, 100)

data2 = data1**2 + 3 * np.sin(data1) + np.random.normal(0, 2, 100)

print_pearson_cor(data1, data2)

# Create a complex nonlinear relationship between two variables

data1 = np.linspace(0, 2 * np.pi, 100)

data2 = 2 * np.sin(data1) + 0.5 * np.cos(3 * data1) + np.random.normal(0, 0.2, 100)

print_pearson_cor(data1, data2)

Goodwin and Leech (2007) delineate and illustrate six key factors influencing the magnitude of a Pearson correlation:

| Factors | Explanation |

|---|---|

| Amount of Variability | The degree of variation in the data points plays a role in determining the strength of the correlation. Greater variability can reduce the correlation's apparent size. |

| Differences in Distribution Shapes | When the two datasets being correlated have different distribution shapes (e.g., one is normally distributed, and the other is skewed), this can influence the correlation value. |

| Lack of Linearity | The Pearson correlation measures linear relationships. If the relationship between the variables is not linear, the correlation coefficient may not accurately represent their association. |

| Presence of Outliers | Outliers, which are extreme data points that do not conform to the general pattern, can significantly impact the correlation. A single outlier can inflate or deflate the correlation. |

| Sample Characteristics | The characteristics of the sample can affect the correlation. For example, a small or unrepresentative sample might lead to less reliable correlation estimates. |

| Measurement Error | Measurement errors in the data introduce noise and can attenuate the strength of the correlation. Accurate measurements are essential for robust correlation analysis. |

In conclusion, it's essential to underscore that correlations discovered in correlational research studies should not be construed as indicative of causal relationships between two variables. Pearson correlation is not well-suited for nominal or ordinal data since it assumes that the variables are continuous and normally distributed.

Linear Regression

Linear regression analysis is employed in empirical research to ascertain and model the interrelatedness between two variables, thereby enabling the estimation of one variable's value predicated on the value of another. The variable subject to prediction is referred to as the "dependent variable," while the variable harnessed to forecast the value of the former is designated as the "independent variable." This statistical technique serves as a fundamental tool for modeling and quantifying the linear associations between variables in quantitative investigations.

Linear Regression Function:

where is the dependent variable, is the independent variable, is the slope of the line (the coefficient of the independent variable) and is the intercept (the value of the dependent variable when the independent variable is 0).

Please note: Sometimes the linear regression function is defined as , since it is possible to interchange the order of and without changing the underlying linear relationship between the variables. The and values are simply constants that determine the slope and intercept of the line, and their order in the equation does not affect the linear relationship.

The slope of the regression line is defines as

where is the pearson correlation coefficient, is the standard deviation of and the standard deviation .

With the slope being known, the Y-Intercept can be calculated as

where is the mean of the sample, and is the mean of the sample.

In Python, the scipy library can be used for linear regression:

from scipy import stats

x = [5,7,8,7,2,17,2,9,4,11,12,9,6]

y = [99,86,87,88,111,86,103,87,94,78,77,85,86]

slope, intercept, r, p, std_err = stats.linregress(x, y)The variable slope contains the result of the calculation of ., intercept contains the result of the calculation of , r is the pearson correlation coefficien and p represents the p-value associated with a hypothesis test. In this test, the null hypothesis posits that the slope is equal to zero. The p-value is derived from a Wald Test utilizing a t-distribution to evaluate the significance of the test statistic. std_err contains the standard error of the estimated slope (gradient), assuming that residuals are normally distributed.

With the above definitions, implementing the function for linear regression in python is easy:

def predict(x):

return slope * x + interceptReferences

Cohen, J., 1992. Methods in psychology. A power primer. Psychological Bulletin, 112(1), pp.155-159.

Freedman, D., Pisani, R. and Purves, R., 2007. Statistics: Fourth international student edition. W.W. Norton & Company

Goodwin, L. D., & Leech, N. L. (2006). Understanding Correlation: Factors That Affect the Size of r. The Journal of Experimental Education, 74(3), 249-266. doi:10.3200/jexe.74.3.249-266

Wiki Activity: Clustering

As depicted in the animation available on Moodle, there are issues related to initial centroids in K-Means clustering. The choice of initial centroids algorithm can significantly affect the final clustering results (Celebi et al., 2013):

Sensitivity to Initialization: K-Means can converge to different solutions depending on the initial centroid locations. Different initial centroids may end up with different clusters. This sensitivity to initialization is one of the limitations of K-Means.

Local Optima: K-Means optimization is sensitive to local optima. It can get stuck in a suboptimal solution if the initial centroids are poorly chosen. This is a common problem when dealing with high-dimensional data.

Solution Bias: Biased or inappropriate initial centroids can lead to clustering results that do not reflect the actual underlying structure of the data. This is especially problematic if the initialization is influenced by prior knowledge or assumptions.

To mitigate these issues, researchers like Celebi et al. (2013) have proposed various methods to select better initial centroids, such as K-Means++, which selects centroids that are more spread out across the data distribution, or using hierarchical clustering to initialise centroids. It's essential to be aware of the impact of initialization and potentially run K-Means with different initializations to assess the stability of the results.

References

Celebi, M.E., Kingravi, H.A. and Vela, P.A. (2013) A comparative study of efficient initialization methods for the k-means clustering algorithm. Expert systems with applications, 40(1), pp.200-210.

Jaccard Coefficient Calculations

The Jaccard coefficient (Jaccard, 1902) is a valuable tool for measuring similarity in data with binary or categorical attributes. It's ideal for set data, binary data, or sparse data. However, it's not suitable for continuous or ordinal data, high-dimensional data, and imbalanced categories. The Jaccard coefficient is defined as

where is the Jaccard coefficient (), is set 1 and is set 2.

Please note, calculation for multisets is slightly different.

The Jaccard distance, a measure of dissimilarity between sets, is the complement of the Jaccard coefficient,

The following table

| Name | Gender | Fever | Cough | Test-1 | Test-2 | Test-3 | Test-4 |

|---|---|---|---|---|---|---|---|

| Jack | M | Y | N | P | N | N | A |

| Mary | F | Y | N | P | A | P | N |

| Jim | M | Y | P | N | N | N | A |

In this dataset, gender is considered a symmetric attribute, meaning it doesn't factor into the analysis. The focus lies on the remaining attributes, which are characterised as asymmetric ternary variables using the values Y (typically representing "Yes"), N ("No"), and A. The interpretation of A can vary and may stand for "Absent," "Not applicable,". Given the pathological context, A might also stand for "Adjusted," or "Abnormal". Therefore Y and P is treated as 1, and N and A as 0.

The following Python code calculculates the Jaccard Coefficient for all combinations:

jack = ['Fever', 'Test-1']

mary = ['Fever', 'Test-1', 'Test-3']

jim = ['Fever', 'Cough']

def jaccard_similarity(l1, l2):

return len(set(l1).intersection(set(l2))) / len(set(l1).union(set(l2)))

print("Jaccard similarity (Jack, Mary):", jaccard_similarity(jack, mary))

print("Jaccard similarity (Jack, Jim):", jaccard_similarity(jack, jim))

print("Jaccard similarity (Jim, Mary):", jaccard_similarity(jim, mary))| Pair | Jaccard Coefficient | Jaccard Distance |

|---|---|---|

| (Jack, Mary) | 0.6666666666666666 | 0.3333333333333333 |

| (Jack, Jim) | 0.3333333333333333 | 0.6666666666666666 |

| (Jim, Mary) | 0.25 | 0.75 |

Factors that Influence the Jaccard Coefficient (Fletcher & Islam, 2018):

| Factors | Explanation |

|---|---|

| Set Size | The size of the sets being compared can significantly influence the Jaccard coefficient. Larger sets tend to have more potential for overlap, potentially leading to higher coefficients. |

| Data Preprocessing | The way data is prepared can impact the Jaccard coefficient. Data cleaning and normalization are essential to ensure accurate comparisons. |

| Thresholds | Setting a similarity threshold is crucial. What level of overlap between sets is considered significant? The choice of threshold affects the interpretation of the coefficient. |

| Attribute Selection | Determining which attributes or elements are included in the sets can influence the Jaccard coefficient. Including or excluding specific attributes can lead to different results. |

| Domain Knowledge | Understanding the domain and the specific problem is vital. Some attributes might be more relevant than others, and this knowledge can guide attribute selection and preprocessing. |

| Scaling | Scaling can impact the Jaccard coefficient. For example, if comparing numeric values, scaling techniques like Min-Max scaling may be necessary to make the comparison meaningful. |

| Handling Missing Data | How missing data is handled can impact the Jaccard coefficient. Imputation or exclusion of data points should be considered. |

Ethical considerations

The dataset used in this example contains sensitive personal data subject to GDPR regulations. Data Scientists must obtain consent or anonymise such data before use. Notably, attributes like gender can lead to unfair outcomes, necessitating careful consideration of their inclusion.

Additionally, the application of the Jaccard Coefficient in this context lacks clear purpose, diminishing transparency. Overall, using similarity measures like the Jaccard Coefficient for profiling individuals or groups may raise concerns regarding surveillance, discrimination, and unfair targeting, requiring ethical scrutiny.

References

Fletcher, S. and Islam, M.Z. (2018) Comparing sets of patterns with the Jaccard index. Australasian Journal of Information Systems, 22.

Jaccard, P. (1902) Lois de distribution florale dans la zone alpine. Bull Soc Vaudoise Sci Nat, 38, pp.69-130.

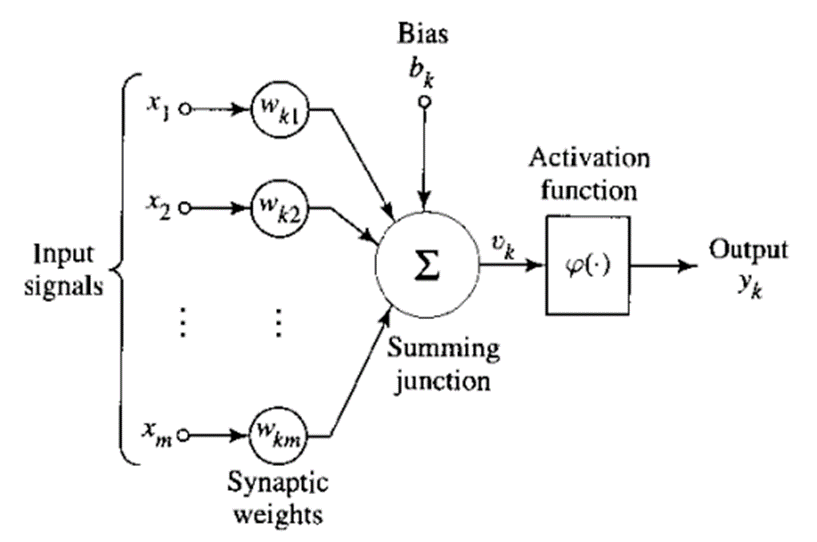

Perceptron Activities

A perceptron is one of the simplest artificial neural network models, introduced by Rosenblatt (1958). It is a fundamental building block of neural networks. A perceptron takes multiple binary inputs, applies weights to these inputs, sums them, and then passes the result through an activation function to produce an output.

Image from Haykin (1998)

The input to the activation function is defined as

where to represent the input signals, to the weights and the bias. essentially represents a linear transformation of input signals through the utilization of weights and bias. In the absence of an activation function, the neural network effectively operates as a linear regression model, constraining its ability to grasp intricate patterns within data. Consequently, the introduction of non-linearity into the network is achieved through the incorporation of an activation function.

The activation function is often denoted as . The output of a neuron hence becomes

As demonstrated in the supplied Python code, a single perceptron can successfully master logical AND, OR, and NOT operations. However, it was historically revealed by Minsky and Papert (1969) that perceptrons face limitations when tackling the XOR problem, which notably played a role in triggering the initial AI winter, leading to reductions in funding for neural networks. Nevertheless, contemporary understanding has shown that a multilayer perceptron effortlessly overcomes the XOR problem.

References

Rosenblatt, F. (1958) The perceptron: a probabilistic model for information storage and organization in the brain. Psychological review, 65(6), p.386.

Minsky, M. & Papert, S. (1969) Perceptron: an introduction to computational geometry. The MIT Press, Cambridge, expanded edition, 19(88), p.2.

Gradient Cost Function

Gradient descent is an optimization algorithm used to minimise a differentiable and typically convex cost function , where represents the parameters of a model. The goal is to find the set of parameters that minimises (Mitchell, 1997). This is achieved through an iterative process of updating by moving in the direction of the negative gradient of with respect to , denoted as :

where is the learning rate, a hyperparameter that controls the size of the steps taken in the parameter space and is the gradient vector, which contains partial derivatives of with respect to each parameter . is a small positive value, typically chosen empirically. points in the direction of the steepest increase in , so the algorithm moves in the opposite direction to minimise .

The update process is repeated until a stopping criterion is met, which could be a maximum number of iterations, a target value of , or convergence conditions. The key idea is that by iteratively adjusting the parameters in the direction of steepest descent, we aim to find the local or global minimum of the cost function.

Mathematically, the update equation is equivalent to performing a Taylor series expansion of around the current parameter values and moving in the direction of the steepest decrease, effectively approximating the cost function locally as a linear function.

References

Mitchell, T.M. (1997) Machine learning.

Collaborative Discussion 2: Legal and Ethical views on ANN applications

This chapter encompasses the introductory post, peer responses written by me, and my summary post for Collaborative Discussion 2 on the topic of the Legal and Ethical views on ANN applications.

Initial Post

Generative AI, exemplified by ChatGPT, presents complex challenges in authorship, quality, ethics, and social impact (Hutson, 2021). Currently, AI-generated content is considered public domain under US and EU copyright laws due to the absence of AI's legal authorship (Smits & Borghuis, 2022). However, it is equally unclear if humans can claim authorship of AI-generated works, creating a potential risk of plagiarism due to the uncertain degree of independent intellectual effort (Bisi et al., 2023).

These legal ambiguities require urgent clarification. Beyond copyright concerns, a myriad of ethical and societal risks, encompassing social justice, individual needs, culture, and environmental impacts, must be carefully assessed and, when necessary, legally mitigated (Stahl & Eke, 2023).

Conversely, a broad spectrum of social and ethical benefits exists, spanning collective human identity, well-being, technology's role, beneficence, sustainability, health, autonomy, animal rights, social support, labour market, and financial impact (Stahl & Eke, 2023).

In concrete terms, AI could enable individually tailored content that can only be presented once and thus take into account the concrete needs of the individual (Smits & Borghuis, 2022).

However, current research highlights AI's shortcomings, particularly in logical argumentation, compared to human authors (Ma et al., 2023; Su et al., 2023).

In conclusion, despite remarkable results, GPT models remain tools rather than authors, needing both the legal basis for authorship claims and the ability to match human quality consistently. Given AI's rapid evolution, policymakers must promptly address the open questions. In addition, low-risk handling of AI should be trained.

References

Bisi, T., Risser, A., Clavert, P., Migaud, H. and Dartus, J. (2023) What is the rate of text generated by artificial intelligence over a year of publication in Orthopedics & Traumatology: Surgery & Research? Analysis of 425 articles before versus after the launch of ChatGPT in November 2022. Orthopaedics & Traumatology: Surgery & Research, p.103694.

Hutson, M. (2021) Robo-writers: the rise and risks of language-generating AI. Nature, 591(7848), pp.22-25.

Ma, Y., Liu, J., Yi, F., Cheng, Q., Huang, Y., Lu, W. and Liu, X. (2023) AI vs. human-differentiation analysis of scientific content generation. arXiv preprint arXiv, 2301, p.10416.

Smits, J. and Borghuis, T. (2022) Generative AI and Intellectual Property Rights. In Law and Artificial Intelligence: Regulating AI and Applying AI in Legal Practice (pp. 323-344). The Hague: TMC Asser Press.

Stahl, B.C. and Eke, D. (2023) The ethics of ChatGPT-Exploring the ethical issues of an emerging technology. International Journal of Information Management, 74, p.102700.

Su, Y., Lin, Y. and Lai, C. (2023) Collaborating with ChatGPT in argumentative writing classrooms. Assessing Writing, 57, p.100752.

Peer Response 1

Thank you, Danilo, for your insightful post.

The distinction between weak AI and self-aware AI is crucial, as it highlights the limitations and potentials of AI in academic tasks. The idea that AI can assist in summarizing articles and composing scientific papers is promising. As you mentioned, human oversight for correctness, consistency, and authenticity is essential, ensuring the quality and integrity of the work.

The weak AI hypothesis, as defined by Russell and Norvig (2016), posits that machines can behave in a manner that gives the appearance of intelligence. In contrast, the strong AI hypothesis asserts that machines engage in thinking processes rather than merely simulating them. Replicating human behaviour in machines may not represent the ideal approach, given the inherent diversity in human performance and that humans do not consistently excel in all tasks. A more suitable objective is to develop machines that emulate the characteristics of an "ideal human" capable of excelling in various tasks, recognizing that no single human possesses mastery in all domains (Emmert-Streib et al., 2020).

Your post would be enhanced by a more in-depth analysis of the challenges associated with using AI in academic writing. Consider incorporating research findings related to authorship and plagiarism, as demonstrated by studies such as those conducted by Smits and Borghuis (2022) and Bisi et al. (2023). This would provide a more comprehensive understanding of the implications and ethical considerations surrounding AI's role in academic writing.

References

Bisi, T., Risser, A., Clavert, P., Migaud, H. and Dartus, J. (2023) What is the rate of text generated by artificial intelligence over a year of publication in Orthopedics & Traumatology: Surgery & Research? Analysis of 425 articles before versus after the launch of ChatGPT in November 2022. Orthopaedics & Traumatology: Surgery & Research, p.103694.

Emmert-Streib, F., Yli-Harja, O. and Dehmer, M. (2020) Artificial intelligence: A clarification of misconceptions, myths and desired status. Frontiers in artificial intelligence, 3, p.524339.

Russell, S. J., and Norvig, P. (2016) Artificial intelligence: a modern approach. Harlow, England: Pearson, 1136.

Smits, J. and Borghuis, T. (2022) Generative AI and Intellectual Property Rights. In Law and Artificial Intelligence: Regulating AI and Applying AI in Legal Practice (pp. 323-344). The Hague: TMC Asser Press.

Peer Response 2

Thank you, Lojayne, for your insightful post. Your post is well-structured and adequately references recent studies in the field, enhancing its credibility.

As elucidated by Ruschemeier (2023), AI's transformative capabilities encompass not only promising advancements but also inherent risks. These risks extend beyond the individual level and encompass broader societal implications. The influence of AI on both personal and collective dimensions of society underscores the necessity of comprehensive and forward-thinking regulation to mitigate its potential adverse effects. Of particular concern is the precarious situation of those who are technologically disadvantaged and underrepresented in this evolving landscape, as they stand to bear the brunt of these challenges (Smith & Neupane, 2018). Within the European Union, the ramifications of AI's unchecked progression have led to a proactive response in the form of the Artificial Intelligence Act (AI Act) (European Commission, 2021). This legislative proposal seeks to establish a regulatory framework that governs AI development and deployment. By delineating clear guidelines and standards for AI systems, the AI Act aims to balance innovation and safeguard societal well-being.

In conclusion, the unregulated expansion of AI technologies presents a host of challenges, which encompass increased inequality, economic disturbances, social tensions, and political instability. These consequences are particularly pronounced for marginalised and technologically underprivileged individuals and communities (Smuha et al., 2021). Consequently, the need for robust and thoughtful regulatory measures, such as the AI Act, is evident to manage the transformative power of AI for the greater good while mitigating potential risks.

References

European Commission (2021) Proposal for a regulation of the European Parliament and the Council laying down harmonised rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. EUR-Lex-52021PC0206.

Ruschemeier, H. (2023) AI as a challenge for legal regulation-the scope of application of the artificial intelligence act proposal. In ERA Forum (Vol. 23, No. 3, pp. 361-376). Berlin/Heidelberg: Springer Berlin Heidelberg.

Smith, M. and Neupane, S., 2018. Artificial intelligence and human development: toward a research agenda.

Smuha, N.A., Ahmed-Rengers, E., Harkens, A., Li, W., MacLaren, J., Piselli, R. and Yeung, K. (2021) How the EU can achieve legally trustworthy AI: a response to the European Commission's proposal for an artificial intelligence act. Available at SSRN 3899991.

Summary Post

The development and deployment of artificial intelligence (AI) technologies have raised significant ethical and regulatory concerns on a global scale (Ruschemeier, 2023; Smith & Neupane, 2018). The Organisation for Economic Co-operation and Development (OECD) and the United Nations Educational, Scientific and Cultural Organization (UNESCO) have taken proactive steps in addressing these concerns by formulating Principles and Recommendations on the Ethics of AI (OECD, 2023; UNESCO, 2022). These initiatives seek to provide a framework for responsible AI development and usage.

Many nations have recognised the need for guidelines that promote trustworthy AI (OECD, 2021). To this end, several countries have introduced guidelines and regulations tailored to their contexts. However, the European Commission has emerged as a notable frontrunner in the global effort to regulate AI. In April 2021, the European Commission unveiled its comprehensive proposal for the Artificial Intelligence Act (AI Act), a landmark piece of legislation (European Commission, 2021).

The AI Act is poised to take effect in the late 2025 to early 2026 timeframe and is set to redefine the ethical and regulatory landscape of AI. This legislation primarily aims to bolster rules surrounding data quality, transparency, human oversight, and accountability (Smuha et al., 2021). Moreover, it addresses ethical dilemmas and implementation challenges across various sectors, encompassing healthcare, education, finance, and energy.

One of the notable aspects of the AI Act is that it places accountability on all parties involved in AI, whether in its development, usage, import, distribution, or manufacturing (World Economic Forum, 2023; Sathe & Ruloff, 2023). This all-encompassing approach reflects the global consensus on the importance of ethical AI.

Sathe and Ruloff (2023) shed light on the practical steps companies must take to comply with the AI Act. These steps include conducting a comprehensive model inventory, categorizing models based on risk levels, and preparing for AI system management. This preparation involves assessing risks, raising awareness, designing ethical systems, assigning responsibilities, staying current with best practices, and establishing formal governance structures. These steps are integral to ensuring that AI technologies align with the principles of ethics and accountability, as envisioned by the AI Act and other international guidelines.

References

European Commission (2021) Proposal for a regulation of the European Parliament and the Council laying down harmonised rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union legislative acts. EUR-Lex-52021PC0206.

OECD (2021) "State of implementation of the OECD AI Principles: Insights from national AI policies", OECD Digital Economy Papers, No. 311, OECD Publishing, Paris, https://doi.org/10.1787/1cd40c44-en.

OECD (2023) OECD AI Principles overview. Available from: https://oecd.ai/en/ai-principles [Accessed 28 October 2023]

Ruschemeier, H. (2023) AI as a challenge for legal regulation-the scope of application of the artificial intelligence act proposal. In ERA Forum (Vol. 23, No. 3, pp. 361-376). Berlin/Heidelberg: Springer Berlin Heidelberg.

Sathe, M., Ruloff, K. (2023) The EU AI Act: What it means for your business. Available from: https://www.ey.com/en_ch/forensic-integrity-services/the-eu-ai-act-what-it-means-for-your-business [Accessed 28 October 2023]

Smith, M. and Neupane, S., 2018. Artificial intelligence and human development: toward a research agenda.

Smuha, N.A., Ahmed-Rengers, E., Harkens, A., Li, W., MacLaren, J., Piselli, R. and Yeung, K. (2021) How the EU can achieve legally trustworthy AI: a response to the European Commission's proposal for an artificial intelligence act. Available at SSRN 3899991.

UNESCO (2022) Recommendation on the Ethics of Artificial Intelligence. Available from: https://unesdoc.unesco.org/ark:/48223/pf0000381137 [Accessed 28 October 2023]

World Economic Forum (2023) The European Union's Artificial Intelligence Act - explained. Available from: https://www.weforum.org/agenda/2023/06/european-union-ai-act-explained/ [Accessed 28 October 2023]

CNN Model Activity

During the course, I worked simultaneously on this task and the team presentation. Consequently, I opted to document my theoretical learning outcomes in this chapter, while all practical results and contributions are outlined in Chapter Development Team Project: Team Presentation.

Artificial Neural Networks (ANNs), a constituent of AI, are inspired by the brain, although they do not attempt an exact replication, as noted by Emmert-Streib et al. (2020). ANNs offer several advantages, as elucidated by Haykin (1998), including nonlinearity, versatility, adaptability to dynamic environments, confidence in decision-making, and seamless handling of contextual information.

According to Haykin (1998), a neural network can be defined as follows:

"A neural network is a massively parallel distributed processor consisting of simple processing units, which possesses a natural inclination for acquiring experiential knowledge and making it accessible for practical use. This design mirrors the brain in two fundamental aspects:

1. Knowledge acquisition from the environment occurs through a learning process. 2. Acquired knowledge is stored using interneuron connection strengths, referred to as synaptic weights."

Prominent authors like Krizhevsky & Hinton (2009), Goodfellow (2016), and Thoma (2017) endorse Convolutional Neural Networks (CNNs) as the standard in image processing. Their recommended architecture combines convolutional and pooling layers for feature learning, complemented by feedforward layers for classification.

Convolutional layers specialise in capturing local patterns and features within input images, including edges, textures, and intricate visual elements that enhance the image's structure. In parallel, pooling layers are crucial for reducing the spatial dimensions (width and height) of the feature maps generated by the convolutional layers.

Validation Set

The validation set is a cornerstone in machine learning, serving multiple crucial roles. It assesses a model's ability to generalise to new data, fine-tunes hyperparameters without training bias, prevents overfitting, and enables early stopping when performance plateaus or degrades.

The Validation Set plays key roles:

- Model Evaluation: Evaluates the model's generalization.

- Hyperparameter Tuning: Guides parameter adjustments without overfitting risks.

- Overfitting Prevention: Detects and mitigates overfitting.

- Early Stopping: Halts training when validation performance stagnates or declines.

Using the test set as a validation set risks data leakage, over-optimistic estimates, and poor generalization. The test set should solely assess the model's generalization performance, maintaining independence from training data and preventing data leakage.

Crucial considerations:

- Independence: Keep the validation set separate from training data.

- Data Leakage Prevention: Safeguard against unintended data leakage.

- Reserved Test Set: Save the test set for final performance assessment.

Activation Functions

During training, a neural network fine-tunes its neurons by updating their weights and biases. When the network's output significantly deviates from the actual target, it computes the error and proceeds with the essential process of "back-propagation." The main differences between neurons in a Convolutional Neural Network (CNN) and a perceptron are:

Local Receptive Fields: CNN neurons have localised receptive fields, while perceptrons are fully connected to the previous layer.

Weight Sharing: CNN neurons share weights within their receptive fields, enabling them to capture spatial patterns.

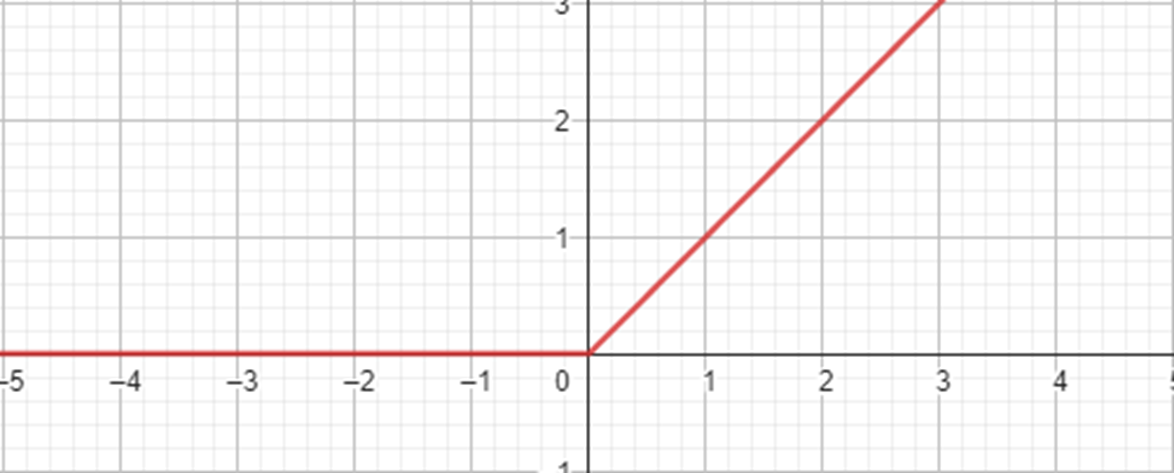

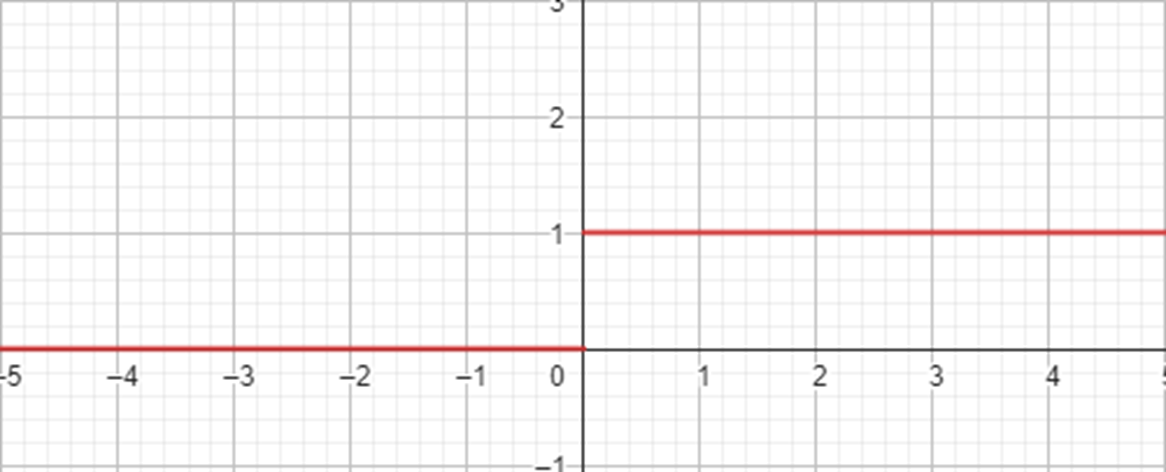

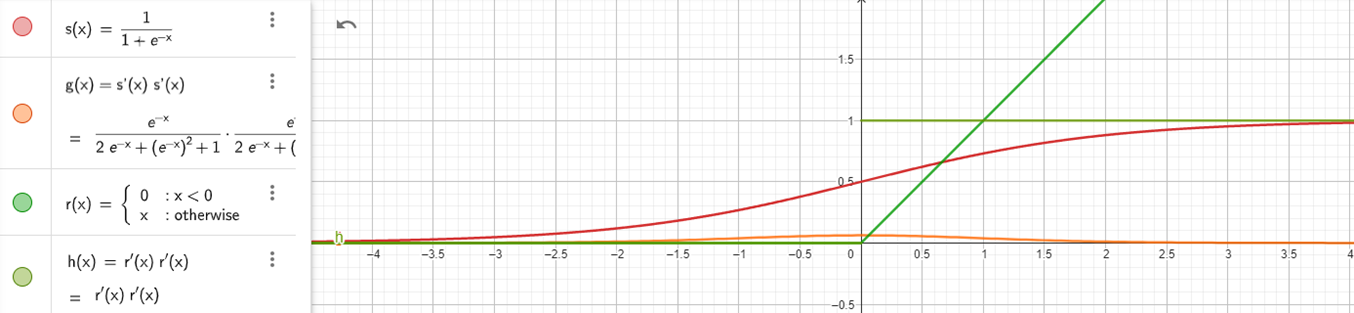

Both Convolutional Neural Networks (CNNs) and perceptrons (or artificial neurons) use activation functions to introduce non-linearity into the model. A sample of some common activation functions:

- ReLU (Rectified Linear Unit): Typically used in hidden layers for addressing vanishing gradient problems and accelerating training in deep networks.

- Sigmoid: Often employed in binary classification problems to squash output values between 0 and 1, representing probabilities.

- Binary: Appropriate for binary classification tasks where the network should produce binary output values.

- Tanh (Hyperbolic Tangent): Useful in cases where data exhibits both positive and negative values, aiding in feature scaling and capturing complex patterns.

- Softplus: Applied in specific situations for its smooth and differentiable nature, promoting numerical stability during training.

- Softmax: Commonly used in the output layer for multiclass classification, converting network scores into class probabilities.

Activation function for CNN

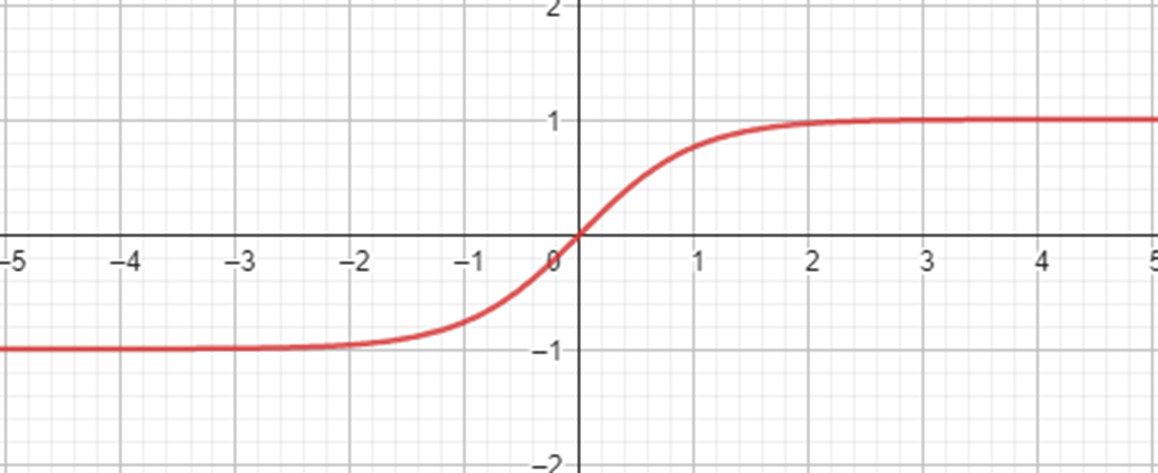

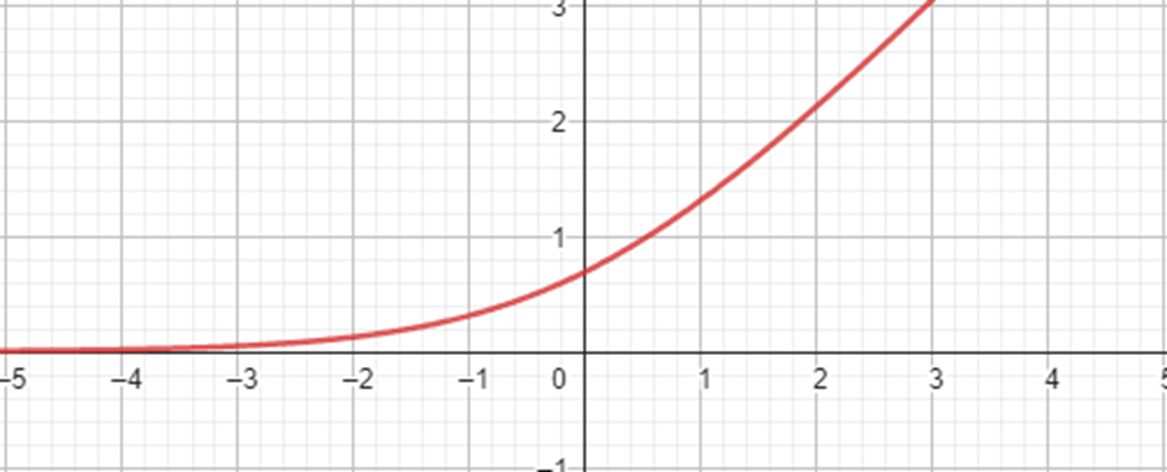

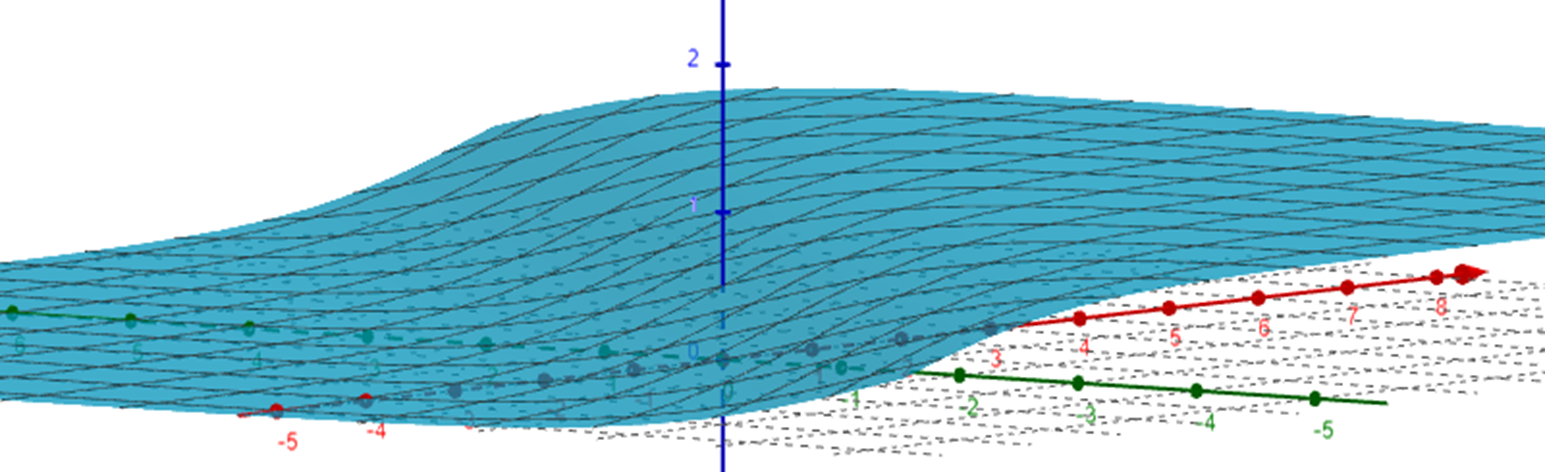

Gradients in neural networks are determined through the process of backpropagation, which essentially computes derivatives for each layer and propagates these derivatives from the final layer to the initial one using the chain rule. However, when there are "n" hidden layers employing an activation function like the sigmoid, the multiplication of "n" small derivatives results in a drastic reduction in the gradient as we move towards the initial layers. This diminished gradient significantly hinders the effective weight and bias updates during training, as depicted by the red and orange functions, where the orange function represents the cumulative derivative of the sigmoid function.

To mitigate this issue, the green functions represent the ReLU function and its derivative. The ReLU function offers a solution to the vanishing gradient problem, making it a favored choice for enhancing training efficiency in neural networks.

Furthermore, the Softmax activation function transforms numerical values or logits into probabilities. The output of Softmax is a vector referred to as "v," which comprises probabilities for each possible outcome.

Loss, Cost and Error Function

The terms "Loss," "Error," and "Cost" functions, although often used interchangeably, lack strict definitions. In this context, an error function typically represents the disparity between a prediction and the actual value, while "loss" serves to quantify the adverse outcomes stemming from these errors from a decision-theoretic standpoint. For example, models that underestimate predictions related to natural disasters or environmental pollution can be problematic due to potential health implications in the latter case. Specific loss functions are selected to address such situations.

In summary, while "Loss," "Error," and "Cost" functions are frequently used synonymously, they are conceptually distinct, with the "loss" function aiming to assess the negative consequences of prediction errors from a decision-theoretic perspective.

The selection of a loss function hinges predominantly on the nature of the problem being addressed. In general, two main categories of loss functions exist, tailored to either regression or classification tasks, each defined by its specific mathematical framework.

For regression problems, typical loss functions include:

- Mean Squared Error

- Mean Absolute Error

- ...

On the other hand, for classification tasks, common loss functions comprise:

- Binary Cross-Entropy

- Categorical Cross-Entropy

- ...

For the CIFAR-10 image classification task, involving classification into multiple classes, the Categorical Crossentropy loss function is used. This decision aligns with the recommendation from Zhang and Sabuncu (2018) in their recent paper. Categorical Crossentropy effectively penalises inaccurate predictions, particularly those significantly deviating from the correct class, motivating model prediction refinement. Notably, this loss function is sensitive to outliers and imbalanced data.

Hyperparameter tuning

Hyperparameter tuning, often referred to as hyperparameter optimization, is the process of fine-tuning the hyperparameters of a machine learning model to achieve optimal performance. Hyperparameters are settings or configurations that are not learned from the data but need to be predefined by the user.

Learning Rate

The learning rate, often denoted as , is a hyperparameter that governs the size or step length of adjustments made to the model's weights and biases during the training process. It influences how quickly or slowly a neural network or other machine learning model learns from the data.

Nazir et al. (2018) recommend a learning rate of . It's noteworthy that this value aligns with the default learning rate for the Adam optimiser in Keras. Although Keras offers dynamic learning rate scheduling options, these were not explored in this study.

Batch Size

The batch size refers to the number of data samples used in each iteration. It determines how many examples the model processes before updating its weights and biases. Keskar et al. (2016) and Thoma (2017) recommend a batch size of 32, aiming to balance training efficiency, model quality, and memory utilization, in line with the recommendation of Nazir et al. (2018).

As per Keskar et al. (2016) and Thoma (2017):

- Extremely small or large batch sizes, like 8 or 4096, prolong training convergence.

- Larger batch sizes result in more efficient training per epoch.

- Smaller batch sizes enhance model quality, contributing to improved generalization.

- Selecting batch sizes as powers of two is advantageous for memory efficiency and performance.

Regularization Techniques

Regularization encompasses adjustments made to a learning algorithm aimed at minimizing its generalization error while keeping its training error relatively unaffected (Goodfellow et al., 2016).

Dataset Augmentation

Dataset Augmentation, inspired by Perronnin et al. (2012), expands the training dataset and introduces variations to enhance generalization and overall performance.

Dropout Layers

Dropout Layers, as introduced by Srivastava et al. (2016), are instrumental in preventing overfitting by intermittently disabling connections between neurons, analogous to students occasionally missing class, thereby promoting collective learning.

Early Stopping

The early stopping strategy halts the training process when the loss exhibits non-decreasing behavior for a predefined number of consecutive iterations, as governed by the patience parameter. In these instances, the strategy reverts to the model's best weights. This approach is underpinned by the probabilistic nature of the values inherent in the loss, a characteristic stemming from the utilization of the Categorical Cross-Entropy loss function. The principal objective is to cultivate a model capable of making confident and accurate predictions for each example, rather than achieving predictions at a mere 51% confidence level.

Optimization Algorithms

Optimization algorithms in deep learning are used to find the best parameters for a neural network during training, ensuring accurate predictions, faster convergence, and robustness. They also help avoid local minima and allow for hyperparameter tuning.

The 'Adam' optimiser, an abbreviation for Adaptive Moment Estimation, plays a pivotal role in advanced model training, as highlighted by Ogundokun et al. (2022). It stands out for its dynamic learning rate adjustments, leveraging historical gradient information, and combining principles from AdaGrad and RMSProp, as demonstrated by Zou et al. (2019). This adaptability and efficiency, particularly in handling complex datasets, make 'Adam' a cornerstone in modern machine learning practices.

Ethical and social implications

In Chapter Ethical and social implications of the Development Team Project: Team Presentation, I delve into the ethical and social implications of image recognition. During my work on Collaborative Discussion 2, I came across valuable insights. Notably, both the OECD and UNESCO have furnished recommendations and guidelines for AI governance. In conjunction with the proposed AI Act by the European Commission, this juncture significantly expanded my comprehension of ethics and social risks in AI. Furthermore, as UNESCO recommendations have been accepted by member states, I offer a summarised overview here.

The UNESCO's Recommendation on the Ethics of AI addresses the ethical challenges presented by AI systems, acknowledging their potential to introduce biases, contribute to environmental degradation, pose threats to human rights, and more. The Recommendation establishes a framework comprising four core values: Respect, Protection, and Promotion of Human Rights and Fundamental Freedoms; Living in Peaceful, Just, and Interconnected Societies; Ensuring Diversity and Inclusiveness; and Environment and Ecosystem Flourishing. It further outlines ten core principles that emphasise a human-rights centered approach to AI ethics:

- Proportionality and Do No Harm: AI usage should align with legitimate aims, with risk assessment to prevent harm.

- Safety and Security: Avoid unwanted harms and vulnerabilities to attack, with the need for oversight.

- Right to Privacy and Data Protection: Protect privacy and establish data protection frameworks.

- Multi-Stakeholder and Adaptive Governance and Collaboration: Respect international law, national sovereignty, and the participation of diverse stakeholders.

- Responsibility and Accountability: Ensure auditability, oversight, impact assessment, and due diligence to avoid conflicts with human rights and environmental well-being.

- Transparency and Explainability: AI systems should be transparent and explainable, balancing with principles like privacy and safety.

- Human Oversight and Determination: AI systems should not displace human responsibility.

- Sustainability: Assess AI technologies against their impact on sustainability.

- Awareness and Literacy: Promote public understanding of AI and data through education and digital skills.

- Fairness and Non-Discrimination: Promote social justice and accessibility in AI benefits.

Additionally, the Recommendation specifies eleven key areas for policy actions, including ethical impact assessment, governance, data policy, development, environment, gender, culture, education, communication, economy, and health, to provide practical strategies for implementing these principles and values.

Hence, it is unsurprising that companies are beginning to make preparations and adapt accordingly:

- Google launched a competition for machine unlearning, focusing on the removal of data from models rather than retraining them.

- OpenAI lunches a preparedness team, to support the safety of highly-capable AI systems.

- For some time now, Google has its own AI Principles.

References

Emmert-Streib, F., Yli-Harja, O. and Dehmer, M. (2020) Artificial intelligence: A clarification of misconceptions, myths and desired status. Frontiers in artificial intelligence, 3, p.524339.

Goodfellow, I., Bengio, Y. and Courville, A. (2016) Deep learning. MIT press.

Haykin, S. (1998) Neural networks: a comprehensive foundation. Prentice Hall PTR.

Keskar, N.S., Mudigere, D., Nocedal, J., Smelyanskiy, M. and Tang, P.T.P. (2016) On large-batch training for deep learning: Generalization gap and sharp minima. arXiv preprint arXiv:1609.04836.

Krizhevsky, A. and Hinton, G. (2009) Learning multiple layers of features from tiny images.

Nazir, S., Patel, S. and Patel, D. (2018). Hyper parameters selection for image classification in convolutional neural networks. In 2018 IEEE 17th International Conference on Cognitive Informatics & Cognitive Computing (ICCI* CC) (pp. 401-407). IEEE.

OECD (2021) "State of implementation of the OECD AI Principles: Insights from national AI policies", OECD Digital Economy Papers, No. 311, OECD Publishing, Paris, https://doi.org/10.1787/1cd40c44-en.

Pal, K.K. and Sudeep, K.S. (2016) Preprocessing for image classification by convolutional neural networks. In 2016 IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT) (pp. 1778-1781). IEEE.

Perronnin, F., Akata, Z., Harchaoui, Z. and Schmid, C. (2012). Towards good practice in large-scale learning for image classification. In 2012 IEEE Conference on Computer Vision and Pattern Recognition (pp. 3482-3489). IEEE.

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. and Salakhutdinov, R. (2014) Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1), pp.1929-1958.

Thoma, M. (2017). Analysis and optimization of convolutional neural network architectures. arXiv preprint arXiv:1707.09725.

UNESCO (2022) Recommendation on the Ethics of Artificial Intelligence. Available from: https://unesdoc.unesco.org/ark:/48223/pf0000381137 [Accessed 28 October 2023]

Model Performance Measurement

In this chapter, I will showcase my learning outcomes in using scikit-learn metrics for model evaluation. Scikit-learn is a powerful Python library for machine learning, and its metrics are crucial for assessing the performance of both classification and regression models. I will cover various metrics, including confusion_matrix, f1_score (macro, micro, weighted, and no average), accuracy_score, precision_score, recall_score, classification_report, roc_auc_score, roc_curve, auc, log_loss, mean_squared_error, mean_absolute_error, and r2_score.

The content of this chapter is based on the scikit-learn documentation (Scikit-learn, 2011) and the book by Hackeling (2017).

Confusion Matrix

The confusion matrix is a foundational metric for classification tasks. It provides insight into the true positives, true negatives, false positives, and false negatives, offering a clear picture of a model's classification performance. Here's an example of a confusion matrix:

from sklearn.metrics import confusion_matrix

tn, fp, fn, tp = confusion_matrix([0, 1, 0, 1], [1, 1, 1, 0]).ravel()

(tn, fp, fn, tp)(0, 2, 1, 1)F1, Accuracy, Recall, AUC, and Precision Scores

F1, Accuracy, Recall, AUC, and Precision scores are common metrics used to assess the performance of machine learning models.

F1 Score

The F1 score is a balanced metric that combines precision and recall. It is especially useful when working with imbalanced datasets. We can calculate different forms of F1 scores, including macro, micro, weighted, and no average. Here's an example:

from sklearn.metrics import f1_score

y_true = [0, 1, 2, 0, 1, 2]

y_pred = [0, 2, 1, 0, 0, 1]

print(f"Macro f1 score: {f1_score(y_true, y_pred, average='macro')}")

print(f"Micro F1: {f1_score(y_true, y_pred, average='micro')}")

print(f"Weighted Average F1: {f1_score(y_true, y_pred, average='weighted')}")

print(f"F1 No Average: {f1_score(y_true, y_pred, average=None)}")Macro f1 score: 0.26666666666666666

Micro F1: 0.3333333333333333

Weighted Average F1: 0.26666666666666666

F1 No Average: [0.8 0. 0. ]Accuracy Score

Accuracy score is a simple yet intuitive metric that measures the proportion of correctly classified instances. However, it may not be suitable for imbalanced datasets. Here's an example:

from sklearn.metrics import accuracy_score

y_pred = [0, 2, 1, 3]

y_true = [0, 1, 2, 3]

accuracy_score(y_true, y_pred)0.5Precision Score

Precision quantifies the proportion of true positive predictions among all positive predictions. It is vital in applications where false positives are costly or undesirable. An example:

from sklearn.metrics import precision_score

y_true = [0, 1, 2, 0, 1, 2]

y_pred = [0, 2, 1, 0, 0, 1]

precision_score(y_true, y_pred, average='macro')0.2222222222222222Recall Score

Recall, also known as sensitivity or true positive rate, measures the proportion of actual positive instances that the model correctly identifies. It is particularly valuable when minimizing false negatives is crucial. Here's an example:

from sklearn.metrics import recall_score

y_true = [0, 1, 2, 0, 1, 2]

y_pred = [0, 2, 1, 0, 0, 1]

recall_score(y_true, y_pred, average='macro')0.3333333333333333Classification Report

The classification report provides a comprehensive summary of various classification metrics, including precision, recall, F1 score, and support for each class in the target variable. Here's an example:

from sklearn.metrics import classification_report

y_true = [0, 1, 2, 2, 2]

y_pred = [0, 0, 2, 2, 1]

target_names = ['class 0', 'class 1', 'class 2']

print(classification_report(y_true, y_pred, target_names=target_names)) precision recall f1-score support

class 0 0.50 1.00 0.67 1

class 1 0.00 0.00 0.00 1

class 2 1.00 0.67 0.80 3

accuracy 0.60 5

macro avg 0.50 0.56 0.49 5

weighted avg 0.70 0.60 0.61 5ROC AUC Score and ROC Curve

The Receiver Operating Characteristic (ROC) and Area Under the Curve (AUC) help evaluate a model's ability to distinguish between different classes. A higher ROC AUC score indicates better discrimination performance. Here's an example of calculating ROC AUC scores and plotting an ROC curve:

from sklearn.datasets import load_breast_cancer

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import roc_auc_score

X, y = load_breast_cancer(return_X_y=True)

clf = LogisticRegression(solver="liblinear", random_state=0).fit(X, y)

roc_auc_score(y, clf.predict_proba(X)[:, 1])0.9947412927435125Log Loss

Logarithmic Loss (Log Loss) is a metric for evaluating classification model performance by quantifying the difference between predicted probabilities and true labels. Here's an example:

from sklearn.metrics import log_loss

log_loss(["spam", "ham", "ham", "spam"], [[0.1, 0.9], [0.9, 0.1], [0.8, 0.2], [0.35, 0.65]])0.21616187468057912Regression Metrics

In addition to classification metrics, scikit-learn provides essential metrics for regression tasks.

RMSE (Root Mean Squared Error)

RMSE quantifies the average squared difference between predicted and actual values, providing insights into a model's predictive accuracy. Here's an example:

from sklearn.metrics import mean_squared_error

y_true = [3, -0.5, 2, 7]

y_pred = [2.5, 0.0, 2, 8]

mean_squared_error(y_true, y_pred)0.375MAE (Mean Absolute Error)

MAE measures the average absolute difference between predicted and actual values. It is less sensitive to outliers compared to RMSE and offers insights into the model's overall predictive accuracy. Here's an example:

from sklearn.metrics import mean_absolute_error

y_true = [3, -0.5, 2, 7]

y_pred = [2.5, 0.0, 2, 8]

mean_absolute_error(y_true, y_pred)0.5R-squared (R2) Score

The R2 score, also known as the coefficient of determination, assesses the proportion of the variance in the dependent variable that is predictable from the independent variables. A higher R2 score indicates a better-fitting regression model. Here's an example:

from sklearn.metrics import r2_score

y_true = [3, -0.5, 2, 7]

y_pred = [2.5, 0.0, 2, 8]

r2_score(y_true, y_pred)0.9486081370449679References

Hackeling, G. (2017). Mastering Machine Learning with scikit-learn. Packt Publishing Ltd.

Scikit-learn (2011) Machine Learning in Python, Pedregosa et al., JMLR 12, pp. 2825-2830

Development Team Project: Project Report

This chapter delineates my contributions to the Development Team Project. All of my research findings have been meticulously documented within a Jupyter notebook, and selected segments will be seamlessly transposed into the e-portfolio. The analysis was facilitated through the utilization of various Python libraries and requisite imports, which include:

import pandas as pd

import numpy as np

from scipy import stats

import matplotlib.pyplot as plt

import seaborn as sns

from mpl_toolkits.basemap import Basemap

from sklearn.cluster import KMeans

from sklearn.preprocessing import StandardScaler

from sklearn.ensemble import RandomForestRegressor, GradientBoostingRegressor

from sklearn.model_selection import train_test_split

from textblob import TextBlob

import statsmodels.api as sm

import statsmodels.formula.api as smf

# Load the CSV file into a Pandas DataFrame

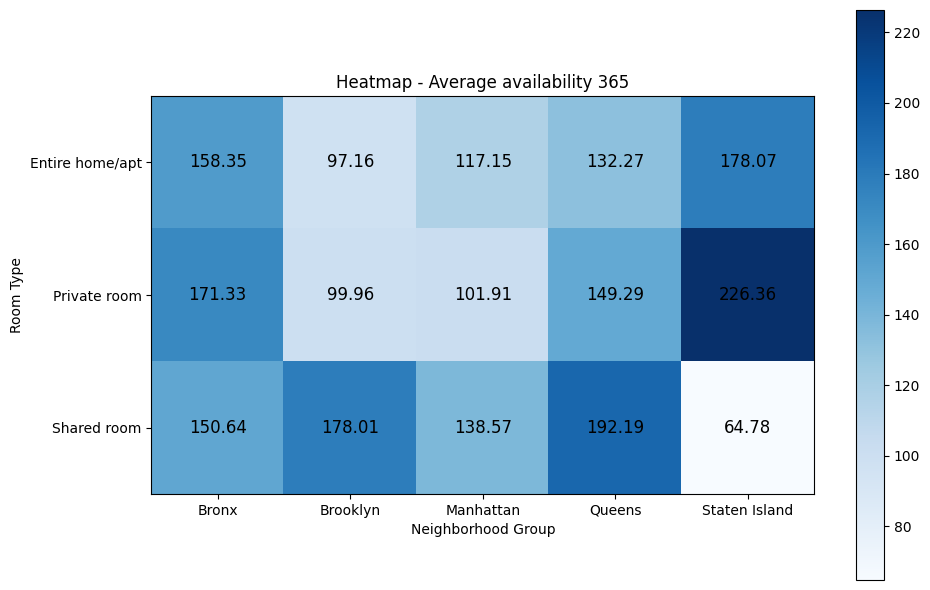

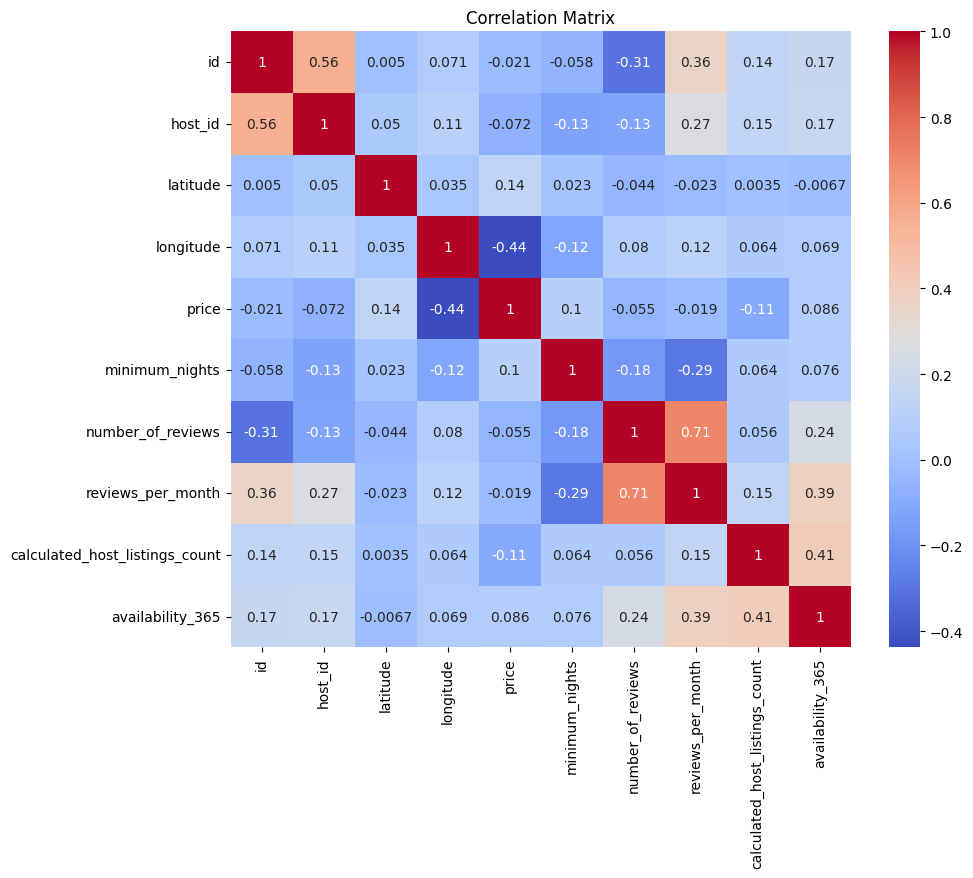

df = pd.read_csv('AB_NYC_2019.csv')Explorative Data Analysis

To begin, it is imperative to acquire a comprehensive understanding of all variables within the dataset.

df.describe(include='all')| id | name | host_id | host_name | neighbourhood_group | neighbourhood | latitude | longitude | room_type | price | minimum_nights | number_of_reviews | last_review | reviews_per_month | calculated_host_listings_count | availability_365 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 4.889500e+04 | 48879 | 4.889500e+04 | 48874 | 48895 | 48895 | 48895.000000 | 48895.000000 | 48895 | 48895.000000 | 48895.000000 | 48895.000000 | 38843 | 38843.000000 | 48895.000000 | 48895.000000 |

| unique | NaN | 47905 | NaN | 11452 | 5 | 221 | NaN | NaN | 3 | NaN | NaN | NaN | 1764 | NaN | NaN | NaN |

| top | NaN | Hillside Hotel | NaN | Michael | Manhattan | Williamsburg | NaN | NaN | Entire home/apt | NaN | NaN | NaN | 2019-06-23 | NaN | NaN | NaN |

Subsequently, ascertain the quantity of missing data present in the dataset.

df.isnull().sum()id 0

name 16

host_id 0

host_name 21

neighbourhood_group 0

neighbourhood 0

latitude 0

longitude 0

room_type 0

price 0

minimum_nights 0

number_of_reviews 0

last_review 10052

reviews_per_month 10052

calculated_host_listings_count 0

availability_365 0

dtype: int64In the absence of any reviews, the variables last_review and reviews_per_month are populated with null values. It is essential to conduct an evaluation of the missing values in the host and host_name variables.

df[df['name'].isnull()]| id | name | host_id | host_name | neighbourhood_group | neighbourhood | latitude | longitude | room_type | price | minimum_nights | number_of_reviews | last_review | reviews_per_month | calculated_host_listings_count | availability_365 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2854 | 1615764 | NaN | 6676776 | Peter | Manhattan | Battery Park City | 40.71239 | -74.01620 | Entire home/apt | 400 | 1000 | 0 | NaN | NaN | 1 | 362 |

| 3703 | 2232600 | NaN | 11395220 | Anna | Manhattan | East Village | 40.73215 | -73.98821 | Entire home/apt | 200 | 1 | 28 | 2015-06-08 | 0.45 | 1 | 341 |

| 5775 | 4209595 | NaN | 20700823 | Jesse | Manhattan | Greenwich Village | 40.73473 | -73.99244 | Entire home/apt | 225 | 1 | 1 | 2015-01-01 | 0.02 | 1 | 0 |

df[df['host_name'].isnull()]| id | name | host_id | host_name | neighbourhood_group | neighbourhood | latitude | longitude | room_type | price | minimum_nights | number_of_reviews | last_review | reviews_per_month | calculated_host_listings_count | availability_365 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 360 | 100184 | Bienvenue | 526653 | NaN | Queens | Queens Village | 40.72413 | -73.76133 | Private room | 50 | 1 | 43 | 2019-07-08 | 0.45 | 1 | 88 |

| 2700 | 1449546 | Cozy Studio in Flatbush | 7779204 | NaN | Brooklyn | Flatbush | 40.64965 | -73.96154 | Entire home/apt | 100 | 30 | 49 | 2017-01-02 | 0.69 | 1 | 342 |

| 5745 | 4183989 | SPRING in the City!! Zen-Style Tranquil Bedroom | 919218 | NaN | Manhattan | Harlem | 40.80606 | -73.95061 | Private room | 86 | 3 | 34 | 2019-05-23 | 1.00 | 1 | 359 |

Given the absence of a discernible rationale for the missing values, it is advisable to eliminate them from the dataset.

df = df.dropna(subset=['host_name', 'name'])

df.isnull().sum()id 0

name 0

host_id 0

host_name 0

neighbourhood_group 0

neighbourhood 0

latitude 0

longitude 0

room_type 0

price 0

minimum_nights 0

number_of_reviews 0

last_review 10037

reviews_per_month 10037

calculated_host_listings_count 0

availability_365 0

dtype: int64Subsequently, proceed to identify and categorise the columns as either categorical or numeric, while also assessing their scale, as this step is crucial for subsequent encoding processes.

categorical_cols = df.select_dtypes(include=['object', 'category']).columns

numeric_cols = df.select_dtypes(include=['int', 'float']).columns

categorical_colsIndex(['name', 'host_name', 'neighbourhood_group', 'neighbourhood',

'room_type', 'last_review'],

dtype='object')numeric_colsIndex(['id', 'host_id', 'latitude', 'longitude', 'price', 'minimum_nights',

'number_of_reviews', 'reviews_per_month',

'calculated_host_listings_count', 'availability_365'],

dtype='object')In conjunction with the df.describe(include='all') output provided earlier, the variable scales have been identified and are summarised in the following table.

| Nominal | Ordinal | Interval | Ratio |

|---|---|---|---|

| name | last_review | latitude | id |

| host_name | longitude | host_id | |

| neighbourhood_group | price | ||

| neighbourhood | minimum_nights | ||

| room_type | number_of_reviews | ||

| reviews_per_month | |||

| calculated_host_listings_count | |||

| availability_365 |

It is important to note that last_review is considered an ordinal variable since it allows for meaningful comparisons. It will be converted into a numerical variable during a later stage of the analysis.

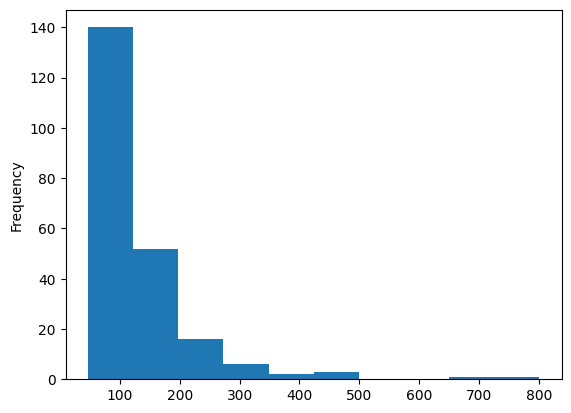

The subsequent step involves examining the distribution of all numerical variables within the dataset.

# initialise figure with 5 subplots in a row

fig, ax = plt.subplots(2, 5, figsize=(10, 6))

index = 0

for i, row in enumerate(ax):

for j, cell in enumerate(row):

if index < 0 or index >= len(numeric_cols):

break

# draw boxplot

sns.boxplot(data=df[numeric_cols[index]], ax=ax[i][j])

ax[i][j].set_xlabel(numeric_cols[index])

index += 1

cell.set_xticklabels([])

plt.subplots_adjust(left=1, bottom=0.1, right=2.5, top=2, wspace=0.5, hspace=0.4)

plt.show()

Indeed, there is evidence of numerous outliers within the price, minimum_nights, number_of_reviews (and consequently, reviews_per_month), and calculated_host_listings_count variables.

Certainly, let's proceed with an investigation into these outliers.

As a first step in investigating the outliers, we will examine whether all host_ids have consistent values for calculated_host_listings_count. This analysis will help us determine if the calculation of calculated_host_listings_count was applied consistently when recording the listings.

# Group by 'host_id' and calculate the maximum and minimum values of 'calculated_host_listings_count' within each group

grouped = df.groupby('host_id')['calculated_host_listings_count'].agg(['max', 'min'])

# Check if all groups have the same maximum and minimum values

all_same = grouped['max'].eq(grouped['min']).all()

if all_same:

print("All distinct host_id values have the same calculated_host_listings_count.")

else:

print("Distinct host_id values have different calculated_host_listings_count values.")All distinct host_id values have the same calculated_host_listings_count.Given that the calculated_host_listings_count values are consistent for each host_id, we can assume that the specific value used for subsequent calculations is not critical.

Next, we should identify the point at which we observe an inflection or "knee" in the distribution of listings per host.

# Group by 'host_id' and aggregate distinct values of 'calculated_host_listings_count'

unique_hosts_and_counts = df.groupby(

'host_id')['calculated_host_listings_count'].agg(lambda x: max(x)

).reset_index()

# Filter to include only rows where distinct 'calculated_host_listings_count' values are greater than 1

filtered_hosts = unique_hosts_and_counts[

unique_hosts_and_counts['calculated_host_listings_count']

.apply(

lambda x: x > 1 and x <= 20

)

]

sorted_hosts = filtered_hosts.sort_values(by='calculated_host_listings_count', ascending=False)

# Calculate the knee point for the line plot

hist, bins = np.histogram(

sorted_hosts['calculated_host_listings_count'],

bins=range(

int(min(sorted_hosts['calculated_host_listings_count'])),

int(max(sorted_hosts['calculated_host_listings_count'])) + 1

)

)

# Create a line plot

plt.figure(figsize=(8, 6))

plt.plot(bins[:-1], hist, marker='o', linestyle='-')

plt.xlabel('calculated_host_listings_count')

plt.ylabel('Frequency')

plt.title('Line Plot to find Knee Point')

plt.grid(True)

plt.show()

# Filter to include only rows where distinct 'calculated_host_listings_count' values are greater than 1

filtered_hosts = unique_hosts_and_counts[

unique_hosts_and_counts['calculated_host_listings_count']

.apply(lambda x: x >= 5)

]

sorted_hosts = filtered_hosts.sort_values(by='calculated_host_listings_count', ascending=False)

pd.set_option('display.max_rows', 50)

sorted_hosts

| host_id | calculated_host_listings_count | |

|---|---|---|

| 34615 | 219517861 | 327 |

| 29379 | 107434423 | 232 |

| 19557 | 30283594 | 121 |

| 31050 | 137358866 | 103 |

| 14426 | 16098958 | 96 |

| ... | ... | ... |

| 20631 | 34614054 | 5 |

| 20284 | 33214549 | 5 |

| 33505 | 191338162 | 5 |

| 20103 | 32545798 | 5 |

| 27136 | 76192815 | 5 |

514 rows × 2 columns

The identified knee point occurs at 5 listings, so we will label all hosts with 5 or more listings as is_professional.

During the descriptive analysis, two hosts, "Sonder (NYC)" with 96 listings and "Sonder" with 327 listings, were noted. Depending on the analysis, you may consider merging the data of these two hosts.

Other attempts to determine if a host is professional (i.e., a company) using methods such as "nltk (Natural Language Toolkit)" and manual methods did not yield successful results.

The next step involves verifying if the host_name values are unique.

# Group the DataFrame by 'host_name' and get unique 'calculated_host_listings_count' values within each group

grouped = df.groupby('host_name')['calculated_host_listings_count'].unique()

# Filter groups where there is more than one unique value for 'calculated_host_listings_count'

different_counts = grouped[grouped.apply(lambda x: len(x) > 1)]

# Print the 'host_name' values with different 'calculated_host_listings_count'

print(different_counts.index.tolist())['(Email hidden by Airbnb)', 'A', 'A. Kaylee', 'A.B.', 'A.J.', 'Aaron', ..., 'Zoe', 'Zoey', 'Zsofia']Since the "host_name" values are not unique, it is advisable to use the "host_id" as the basis for grouping in order to calculate the "is_professional" variable.

def is_professional(count):

return 1 if count >= 5 else 0

# Apply the function to create the 'is_professional' variable

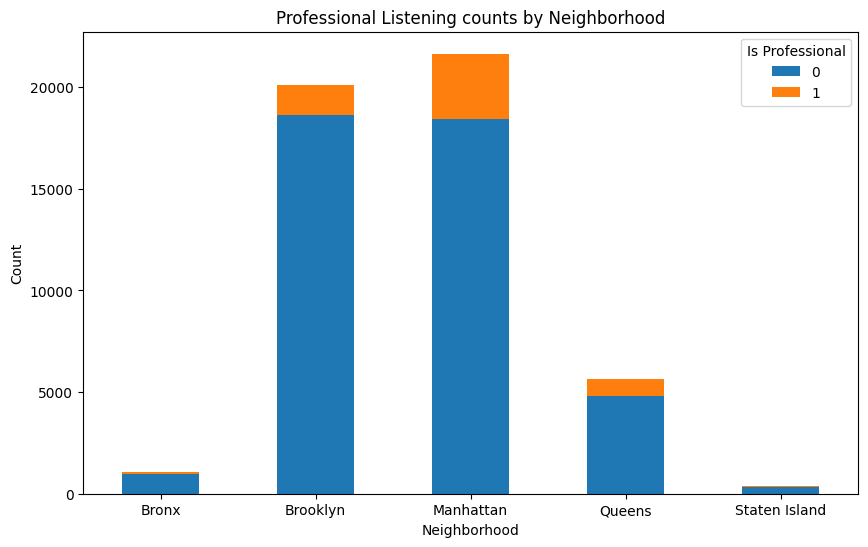

df['is_professional'] = df['calculated_host_listings_count'].apply(is_professional)Next, we should proceed to determine the number of listings that are most likely from a professional real estate owner. This can be achieved by analyzing the is_professional variable we previously defined, where hosts with 5 or more listings were marked as is_professional.

# Get the value counts for 'is_professional' column

value_counts = df['is_professional'].value_counts()

# Calculate relative values as percentages

relative_values = (value_counts / value_counts.sum()) * 100

print('Absolute numbers:')

print(value_counts)

print('')

print('Relative numbers:')

print(relative_values)Absolute numbers:

0 43218

1 5640

Name: is_professional, dtype: int64

Relative numbers:

0 88.456343

1 11.543657

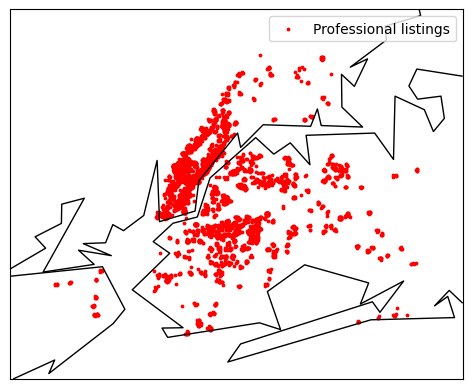

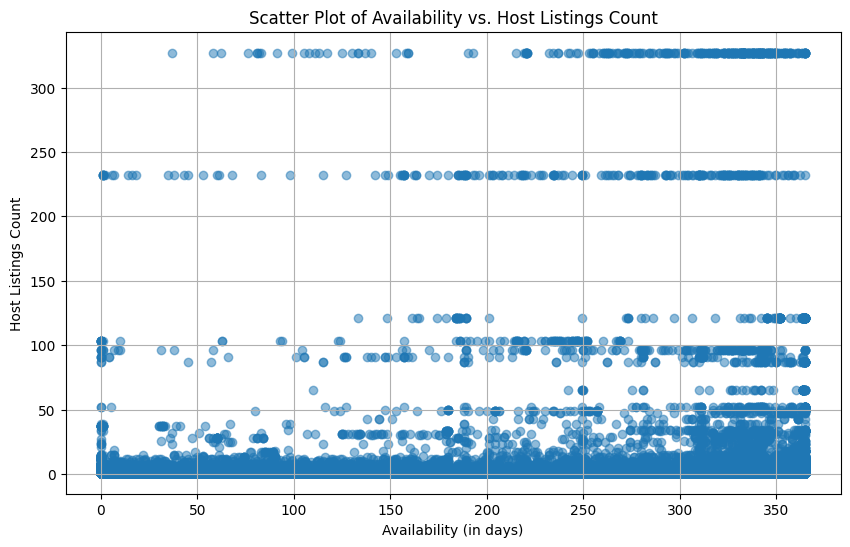

Name: is_professional, dtype: float64To gain further insights into professional listings, let's create visualizations to plot their locations and compare them by neighborhood. This will help us understand the distribution of professional listings in different areas.

prof_df = df[df['is_professional'] == 1]

m = Basemap(

llcrnrlon=prof_df['longitude'].min() - 0.05,

llcrnrlat=prof_df['latitude'].min() - 0.05,

urcrnrlon=prof_df['longitude'].max() + 0.05,